Join the DZone community and get the full member experience.

While RDBMS (Relational Database Management systems) have been popular for decades (and will continue to remain so), in recent time, we have seen the emergence and acceptance of databases/datastores that are not based on relational technology concepts. Such databases are typically called NoSQL, with the team initially meaning "Not SQL," but has come to mean "Not only SQL." When the NoSQL database was released, they did not support the popular SQL interface. Each of the databases had their own API for data access and manipulation, but with the passage of time, a few solutions were developed on top of existing NoSQL databases that supported the SQL interface. For example, the Hive datastore was created on top of HDFS (Hadoop Distributed File System) to provide a "table-like" interface to data and it supports many features of SQL 92. Similarly, though HBase is a column store, an SQL interface was defined. This interface is Phoenix.

When news reporters write about Non-Resident Indians or about Americans of Indian origin (or for that matter, any person of Indian origin, now settled in another country), they use the phrase “You can take the XYZ out of India, but you cannot take India out of XYZ.” Looking at the SQL interfaces provided for NoSQL databases, I would like to coin the phrase, “You can take a programmer out of SQL, but you cannot take SQL out of the programmer.”

Phoenix is an open source SQL skin for HBase. You use the standard JDBC APIs instead of the regular HBase client APIs to create tables, insert data, and query your HBase data. It enables OLTP and operational analytics in Hadoop for low latency applications by combining the best of both worlds:

Apache Phoenix is fully integrated with other Hadoop products such as Spark, Hive, Pig, Flume, and Map Reduce. It takes an SQL query, compiles it into a series of HBase scans, and orchestrates the running of those scans to produce regular JDBC result sets. Direct use of the HBase API, along with coprocessors and custom filters, results in performance on the order of milliseconds for small queries, or seconds for tens of millions of rows.

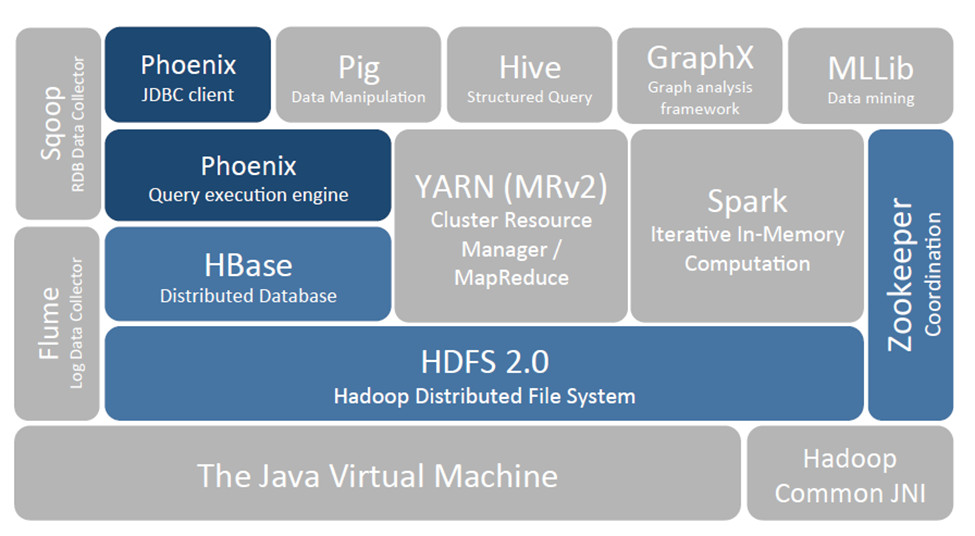

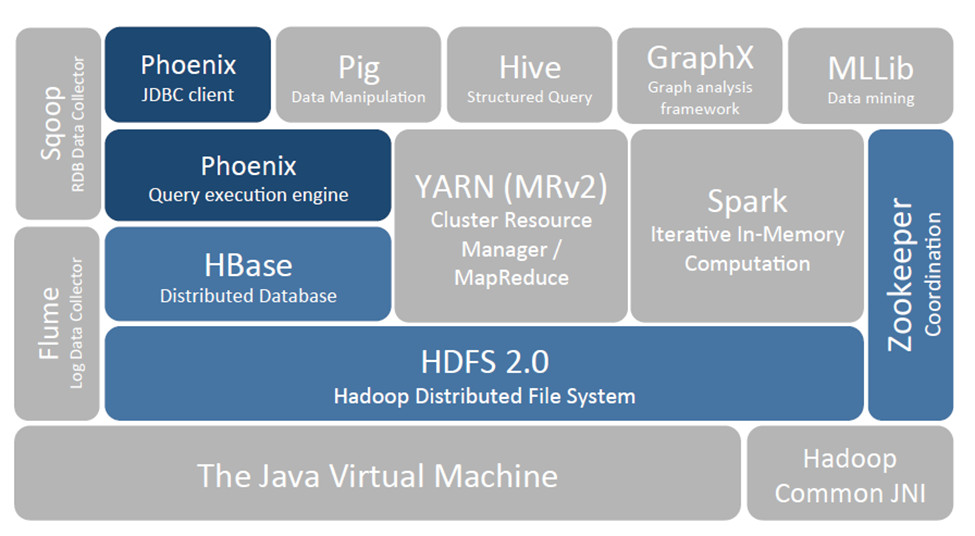

Place in the Hadoop ecosystem:

Figure 1: Phoenix and HBase in the Hadoop Ecosystem

At first glance, given that Phoenix is an SQL skin on top of HBase, it is assumed that using it will result in slower access as compared to direct HBase API access, but in most cases, it has been noted that Phoenix achieves as good or likely better performance than if we hand-coded it ourselves (in addition to the advantage of reduced code development). This is achieved by:

One of the biggest advantages of using Phoenix is that it provides access to HBase using an interface that most programmers are familiar with, namely SQL and JDBC. Some of the other advantages of using Phoenix are:

Architecturally, Phoenix works by using two components, namely a server component and a client component. The client component is nothing but the Phoenix JDBC driver and is used by each client that connects to HBase using Phoenix. On the server side, a Phoenix component resides on each RegionServer in HBase.

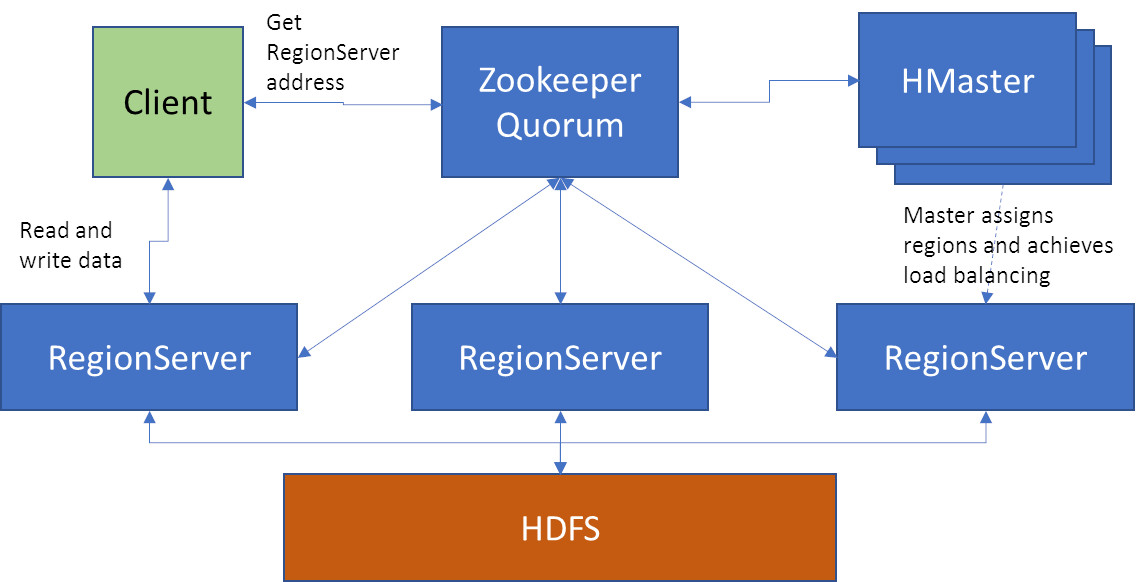

Let us consider the following HBase cluster architecture:

Figure 2: Sample Architecture

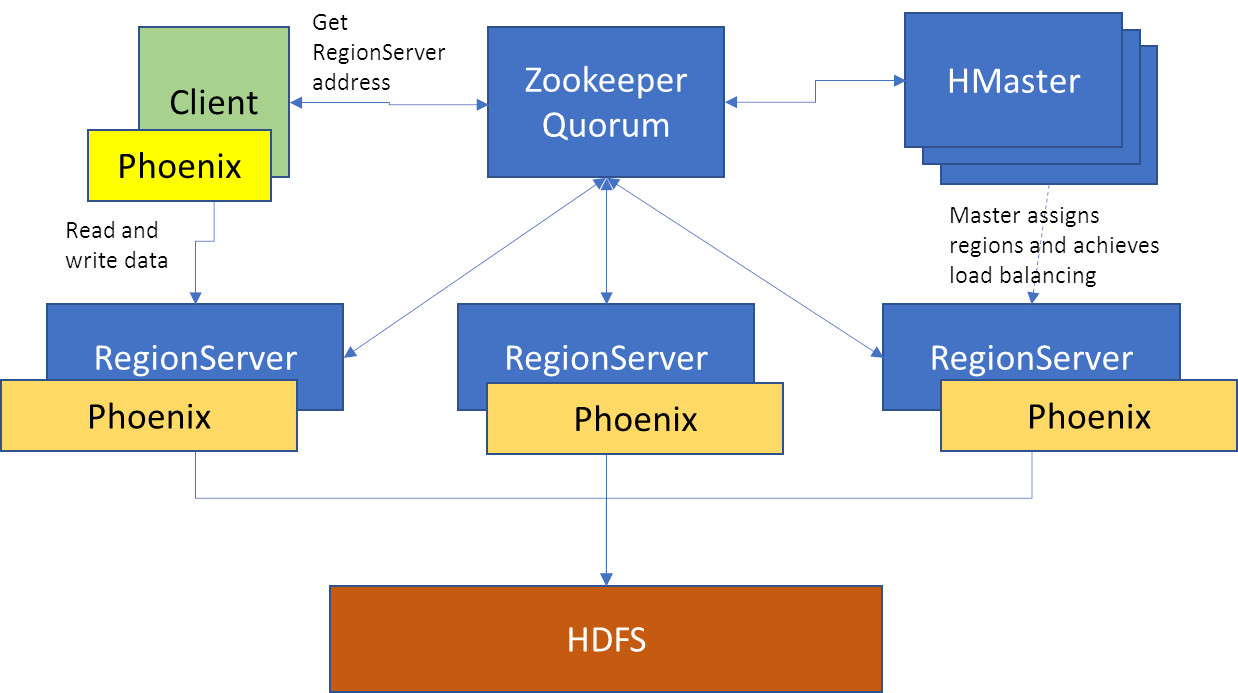

When Phoenix is brought into the picture, we have to note that there are two components — a server-side component that resides on the each RegionServer, and the client-side JDBC library. This diagram depicts the server side component.

Figure 3: Sample Architecture with Phoenix server-side component

To access a Phoenix enabled HDFS database, we need to use the Phoenix JDBC client library. When using the JDBC library, the architecture is as depicted:

Figure 4: Sample architecture with Phoenix client-side component

Let us now consider a simple example using the Phoenix API.

To run the example, we need to include the Phoenix client jar containing the JDBC classes, for example, we can use phoenix-4.1.0-client-hadoop2.jar.

import java.sql.*; import java.util.*; public class PhoenixExample < public static void main(String args[]) throws Exception < Connection conn; Properties prop = new Properties(); Class.forName("org.apache.phoenix.jdbc.PhoenixDriver"); conn = DriverManager.getConnection("jdbc:phoenix:localhost:/hbase-unsecure"); System.out.println("got connection"); ResultSet rst = conn.createStatement().executeQuery("select * from BMARKSP"); while (rst.next()) < System.out.println(rst.getString(1) + " " + rst.getString(2) + " " + rst.getString(3) + " " + rst.getString(4) + " " + rst.getString(5) + " " + rst.getString(6)); >> >When compared to the example presented in "HBase, Phoenix, and Java Part 1," accessing the data stored in HBase is way simpler using Phoenix.

It can seamlessly integrate with HBase, Pig, Flume, and Sqoop.

A few limitations of Phoenix:

Two more examples that show how to use Phoenix:

Database Relational database sql Data (computing) Apache Phoenix Java (programming language) Open source Filter (software)

Opinions expressed by DZone contributors are their own.

Architecture and Code Design, Pt. 1: Relational Persistence Insights to Use Today and On the Upcoming Years